Schema Pruning for Text-to-SQL: 93% Less Context, Zero LLM Calls

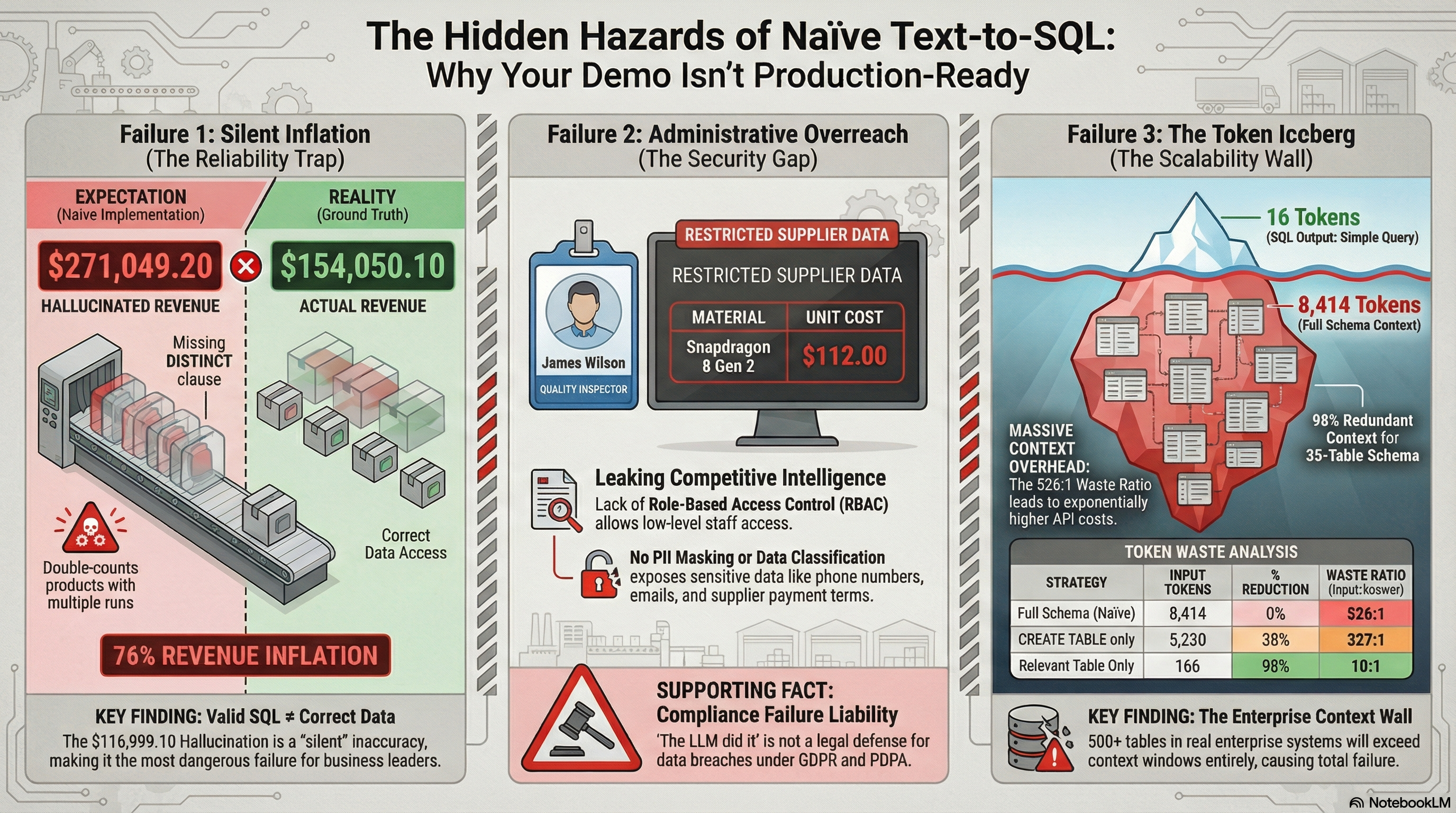

In Part 1, the naïve Text-to-SQL approach sent 8,414 tokens of schema context to generate 16 tokens of SQL, a 526:1 input-to-output ratio. This post engineers the fix: a deterministic schema pruner that selects only the tables relevant to each query, with no LLM calls. It is context engineering applied at the schema layer.
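To make the idea concrete, here is a minimal sketch of what a deterministic pruner could look like. It is not the implementation from this series: the toy `SCHEMA`, the `tokenize` and `prune_schema` helpers, the plural-stripping rule, and the choice to skip `_id` columns are all assumptions made for illustration. The sketch scores each table by keyword overlap with the question and keeps only the tables that match, without calling any model.

```python
import re

# Hypothetical toy schema (table name -> column names); not from the original post.
SCHEMA = {
    "customers": ["customer_id", "name", "email", "country"],
    "orders": ["order_id", "customer_id", "order_date", "total_amount"],
    "products": ["product_id", "title", "price", "category"],
    "shipments": ["shipment_id", "order_id", "carrier", "shipped_at"],
}


def tokenize(text):
    """Lowercase, split on non-alphanumerics, and crudely strip a trailing plural 's'."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {w[:-1] if w.endswith("s") and len(w) > 3 else w for w in words}


def prune_schema(question, schema, min_score=1):
    """Keep tables sharing at least `min_score` tokens with the question.

    Assumption: columns ending in "_id" are skipped when matching, because key
    columns repeat other tables' names and would pull in unrelated tables.
    """
    q_tokens = tokenize(question)
    kept = {}
    for table, columns in schema.items():
        table_tokens = tokenize(table)
        for col in columns:
            if not col.endswith("_id"):
                table_tokens |= tokenize(col)
        if len(q_tokens & table_tokens) >= min_score:
            kept[table] = columns
    return kept


pruned = prune_schema(
    "What is the total amount of orders per customer country?", SCHEMA
)
print(list(pruned))  # ['customers', 'orders']
```

Because the selection is plain set intersection, the same question always yields the same pruned schema, and only the kept tables would be serialized into the prompt. A real pruner would likely also need synonym handling and foreign-key expansion for join paths, which this sketch leaves out.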
